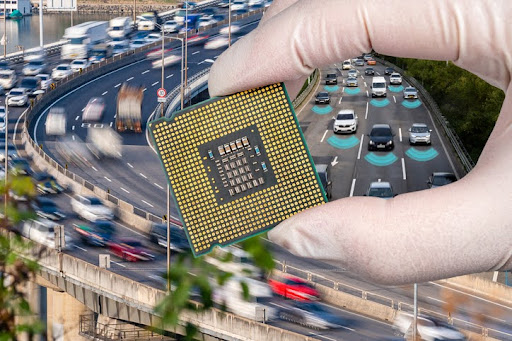

Microprocessors in Autonomous Vehicles

The automotive industry has grown rapidly over the past century, to the point where autonomous vehicles are now a reality. Whether on roads, at sea, or in the air, this new type of vehicle is becoming increasingly common. Autonomous vehicles are intelligent and rely little on humans for their operation.

Microprocessor chips within vehicles are used to transfer information among different components for various purposes, such as communication, sensor control, and decision-making.

In this article, we will discuss how information is processed in autonomous or AI-defined vehicles, the best practices for programming microprocessors in autonomous vehicles, and the challenges of using microprocessors in autonomous vehicles. But first, let’s see how autonomous vehicles work.

Autonomous Vehicles’ Functionality and Sensors Used Within Them

A complex network of cameras and sensors enables autonomous driving by recreating the external environment for the vehicle. The information collected by radar, LiDAR, ultrasonic sensors, and cameras gives the vehicle the details it needs about its surroundings, such as pedestrians, curbs, approaching vehicles, and traffic signals.

With that in mind, let’s look at some key functionalities and understand the role sensors play within them.

Real-time Data Processing

A huge amount of data needs to be processed in autonomous vehicles in real time. This is because the surrounding environment of an autonomous vehicle is constantly changing. Microprocessors help the vehicles process the information and decide what speed to move at, where to stop, and where to take turns.
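To see why processing speed matters, it helps to estimate how far a vehicle travels while a single processing cycle is still running. The sketch below is only illustrative: the function name and the example speed and latency are assumptions, not figures from any particular vehicle.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Return the metres travelled while one processing cycle is still running."""
    speed_ms = speed_kmh / 3.6              # convert km/h to m/s
    return speed_ms * (latency_ms / 1000.0)  # convert ms to s, then distance = v * t


if __name__ == "__main__":
    # Hypothetical example values: highway speed and a 100 ms processing delay.
    travelled = distance_during_latency(speed_kmh=100.0, latency_ms=100.0)
    print(f"Distance covered before a decision is available: {travelled:.2f} m")
```

Even a short delay translates into metres of travel, which is why the processing has to keep pace with the changing environment.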

Safety-critical Features

Microprocessors also enable the safety-critical features in autonomous vehicles. To stay safe while driving, autonomous vehicles must be “fail-operational,” meaning that key functions keep working even after a component fails. Features such as the emergency braking system work effectively because of the high processing speed of microprocessors.
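One common way to reason about emergency braking is time-to-collision (TTC): the gap to an obstacle divided by the speed at which that gap is closing. The following is a minimal sketch under that assumption. The function names and the 1.5 second threshold are hypothetical and chosen only for illustration; production systems use far more elaborate logic.

```python
import math


def time_to_collision(gap_m: float, closing_speed_ms: float) -> float:
    """Seconds until impact if nothing changes; infinite when the gap is not closing."""
    if closing_speed_ms <= 0.0:
        return math.inf
    return gap_m / closing_speed_ms


def should_emergency_brake(gap_m: float, closing_speed_ms: float,
                           ttc_threshold_s: float = 1.5) -> bool:
    """Request braking when TTC drops below the (hypothetical) threshold."""
    return time_to_collision(gap_m, closing_speed_ms) < ttc_threshold_s


if __name__ == "__main__":
    # Example values are illustrative only.
    print(should_emergency_brake(gap_m=10.0, closing_speed_ms=10.0))
    print(should_emergency_brake(gap_m=50.0, closing_speed_ms=10.0))
```

The check itself is cheap; the point is that it has to run often enough for the answer to arrive while braking can still help.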

Cameras

Cameras make autonomous vehicles aware of their surroundings. They support object detection, lane tracking, parking assistance, and blind-spot monitoring, and they capture color information. A monocular (single-lens) vision sensor detects moving objects, while a stereo vision sensor uses two lenses, much like a pair of human eyes, to determine things like an object’s depth.
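Depth from a stereo pair is usually estimated with the standard pinhole relation: depth equals focal length times baseline divided by disparity, where disparity is how far the same point shifts between the two images. The sketch below assumes rectified images and a focal length expressed in pixels; the function name and example numbers are illustrative assumptions.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float,
                   disparity_px: float) -> float:
    """Estimate depth (metres) of a point seen by both cameras of a stereo pair.

    focal_length_px: focal length in pixels
    baseline_m:      distance between the two camera centres in metres
    disparity_px:    horizontal shift of the point between the two images
    """
    if disparity_px <= 0.0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical camera parameters, for illustration only.
    depth = stereo_depth_m(focal_length_px=700.0, baseline_m=0.12, disparity_px=14.0)
    print(f"Estimated depth: {depth:.2f} m")
```

Because depth is inversely proportional to disparity, distant objects produce small disparities and their depth estimates become less precise.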

LiDAR

LiDAR, or “Light Detection and Ranging,” is used in features such as traffic jam assist and automatic emergency braking (AEB). Its main use is 360-degree detection with high accuracy and resolution. A series of laser pulses produces point clouds, which are three-dimensional collections of points, and these allow the sensor to build 3D images of objects.
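A point cloud can be pictured as a list of (x, y, z) coordinates, one per returned pulse. The sketch below converts each return, given as a range plus the beam's azimuth and elevation angles, into Cartesian coordinates. The input layout and function name are assumptions made for illustration; real LiDAR drivers each define their own data formats.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]


def returns_to_point_cloud(
    returns: Iterable[Tuple[float, float, float]]
) -> List[Point]:
    """Convert (range_m, azimuth_deg, elevation_deg) returns into (x, y, z) points."""
    cloud: List[Point] = []
    for range_m, azimuth_deg, elevation_deg in returns:
        az = math.radians(azimuth_deg)
        el = math.radians(elevation_deg)
        horizontal = range_m * math.cos(el)      # projection onto the ground plane
        cloud.append((
            horizontal * math.cos(az),           # x: forward
            horizontal * math.sin(az),           # y: left
            range_m * math.sin(el),              # z: up
        ))
    return cloud


if __name__ == "__main__":
    # Three illustrative returns: straight ahead, to the left, and slightly upward.
    for point in returns_to_point_cloud([(10.0, 0.0, 0.0), (5.0, 90.0, 0.0), (8.0, 0.0, 10.0)]):
        print(tuple(round(c, 3) for c in point))
```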

Radar

Radar works on a similar principle to LiDAR but uses radio waves instead of light. It calculates the distance, velocity, and angle of an approaching object or obstacle around the autonomous vehicle. The time the radio waves take to return from the object gives its distance, while velocity is typically derived from the Doppler shift of the returned signal.
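The range calculation follows directly from the round-trip time: the wave travels to the object and back at the speed of light, so the distance is half of speed times time. For velocity, radars commonly rely on the Doppler shift of the returned signal, which the sketch also covers. The function names are assumptions, and the 77 GHz carrier is only an example value from a band commonly used by automotive radar.

```python
SPEED_OF_LIGHT_MS = 299_792_458.0  # metres per second


def radar_range_m(round_trip_s: float) -> float:
    """Distance to the target from the echo's round-trip time."""
    return SPEED_OF_LIGHT_MS * round_trip_s / 2.0


def radial_velocity_ms(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Speed of the target along the line of sight, from the Doppler shift.

    A positive shift means the target is approaching.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_MS / (2.0 * carrier_hz)


if __name__ == "__main__":
    # Illustrative values: a 1 microsecond echo and a 10 kHz shift at 77 GHz.
    print(f"Range: {radar_range_m(1e-6):.1f} m")
    print(f"Radial velocity: {radial_velocity_ms(10_000.0, 77e9):.2f} m/s")
```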

Ultrasonic

Ultrasonic sensors are used for adaptive cruise control (ACC), collision avoidance, parking assist, and short-range distance sensing, and they are valued for their weather resistance. They are more reliable than camera sensors alone, especially in low-visibility conditions. They can also be paired with AI-based processing, which improves their ability to perceive and assess the environment and plan appropriate actions.

Sensor Fusion in Autonomous Vehicles

Sensor fusion is the process of merging data from multiple sensors to reduce uncertainty in decision-making. The five levels of sensor fusion are:

- Level 1: The first fusion level includes automated feature extraction and digital signal processing for pre-object assessment. Hybrid target identification, unification, variable level of fidelity, and image and non-image fusion happen in the first level.

- Level 2: In the second level, hybrid target identification, image and non-image fusion, and variable levels of fidelity happen.

- Levels 3 and 4: The fusion of cognitive-based modulations and automated sections of knowledge representation is done in the third and fourth levels.

- Level 5: The fifth and last level is called process refinement, and the fusion of measures of performance, measures of effectiveness, and non-commensurate sensors occurs at this level.
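To make the core idea of fusion concrete, that combining sensors reduces uncertainty, here is a minimal sketch of one simple technique, inverse-variance weighting. It is not an implementation of the levels listed above. It assumes each sensor reports the same quantity (for example, distance to an obstacle) with independent noise of known variance; the function name and example numbers are illustrative.

```python
from typing import List, Tuple


def fuse_measurements(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs into a single (value, variance) estimate.

    Each measurement is weighted by the inverse of its variance, so more
    precise sensors contribute more to the fused value.
    """
    if not measurements:
        raise ValueError("at least one measurement is required")
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


if __name__ == "__main__":
    # Hypothetical readings: a noisier camera estimate and a more precise radar estimate.
    camera = (10.0, 4.0)   # distance in metres, variance in m^2
    radar = (12.0, 1.0)
    value, variance = fuse_measurements([camera, radar])
    print(f"Fused distance: {value:.2f} m, variance: {variance:.2f} m^2")
```

The fused variance is always smaller than the smallest input variance, which is the sense in which fusion removes uncertainty.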

Best Practices for Programming Microprocessors in Autonomous Vehicles

Programming microprocessors in autonomous vehicles requires creativity, technical knowledge, and safety awareness. This skill is both challenging and rewarding. The following are some of the best microprocessor programming practices used for autonomous vehicles.

Selection of Hardware and Software Platforms

The first step in programming microprocessors in autonomous vehicles is selecting appropriate hardware and software platforms. While selecting hardware and software, you must consider budget, project goal, and specifications.

A microprocessor’s hardware is its physical components, which include input and output ports, memory, communication interfaces, and the CPU. Software comprises the programming languages, libraries, operating systems, and tools used to build and run code on the microprocessor.

Some of the factors that should be considered when selecting suitable hardware and software for microprocessor programming include compatibility, power consumption, scalability, performance, security, and reliability.

Code Structure and Design

Designing and structuring the code is the next step in programming microprocessors in autonomous vehicles. The code should be easy to read, maintain, and reuse. Principles such as abstraction, modularity, encapsulation, and documentation are all part of good code structure and design.
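As a small illustration of abstraction and encapsulation, the sketch below hides each sensor behind a common interface so that higher-level logic does not depend on any particular device. The class and function names are hypothetical, and production vehicle code is often written in C or C++ instead; Python is used here only to keep the example short.

```python
from abc import ABC, abstractmethod
from typing import Iterable


class RangeSensor(ABC):
    """Abstraction: anything that can report a distance to the nearest object."""

    @abstractmethod
    def read_distance_m(self) -> float:
        """Return the current distance reading in metres."""


class FixedRangeSensor(RangeSensor):
    """Stand-in sensor that always returns a preset value (useful for tests)."""

    def __init__(self, distance_m: float) -> None:
        self._distance_m = distance_m  # encapsulated: callers use read_distance_m()

    def read_distance_m(self) -> float:
        return self._distance_m


def nearest_obstacle_m(sensors: Iterable[RangeSensor]) -> float:
    """Modular logic that works with any RangeSensor implementation."""
    return min(sensor.read_distance_m() for sensor in sensors)


if __name__ == "__main__":
    sensors = [FixedRangeSensor(4.0), FixedRangeSensor(2.5), FixedRangeSensor(7.1)]
    print(f"Nearest obstacle: {nearest_obstacle_m(sensors)} m")
```

Swapping in a real radar or ultrasonic driver would only require a new subclass; `nearest_obstacle_m` would stay unchanged.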

Testing and Debugging

The third step of microprocessor programming in autonomous vehicles is testing and debugging the code. This is done to ensure the code meets the requirements and works as expected. Tools and techniques such as breakpoints, watchpoints, debuggers, emulators, simulators, oscilloscopes, and logic analyzers help verify the code’s performance, functionality, and accuracy.
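Alongside hardware tools, small automated tests can check individual functions before the code ever reaches a vehicle. The sketch below tests a hypothetical throttle-limiting function with plain assertions; the function and test names are assumptions chosen for illustration.

```python
def clamp_throttle(requested: float) -> float:
    """Limit a throttle command to the valid range 0.0 to 1.0."""
    return max(0.0, min(1.0, requested))


def test_in_range_value_passes_through() -> None:
    assert clamp_throttle(0.4) == 0.4


def test_negative_value_is_clamped_to_zero() -> None:
    assert clamp_throttle(-0.2) == 0.0


def test_excessive_value_is_clamped_to_one() -> None:
    assert clamp_throttle(1.7) == 1.0


if __name__ == "__main__":
    # Run every test; an AssertionError pinpoints the failing case.
    for test in (
        test_in_range_value_passes_through,
        test_negative_value_is_clamped_to_zero,
        test_excessive_value_is_clamped_to_one,
    ):
        test()
    print("all tests passed")
```

Tests written this way can also be collected by a test runner such as pytest, so the same checks run on every code change.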

Legal and Ethical Standards

The last step in this process is following the legal and ethical standards that govern the development and deployment of autonomous vehicles. These standards are the regulations and guidelines that ensure the designed code respects the stakeholders’ rights, values, and responsibilities.

These stakeholders can be regulators, users, manufacturers, and society. Accountability, safety, transparency, and privacy are some aspects that should be kept in mind while programming microprocessors in autonomous vehicles.

Conclusion

Microprocessors play an important role in the operation of autonomous vehicles. From data collection to processing and fusion, microprocessors ensure the smooth functioning of autonomous vehicles. Sourcing microprocessors can be difficult because little information is readily available on these electronic parts. Partstack is the ultimate platform that offers quick and smooth purchase and sale of electronic components.